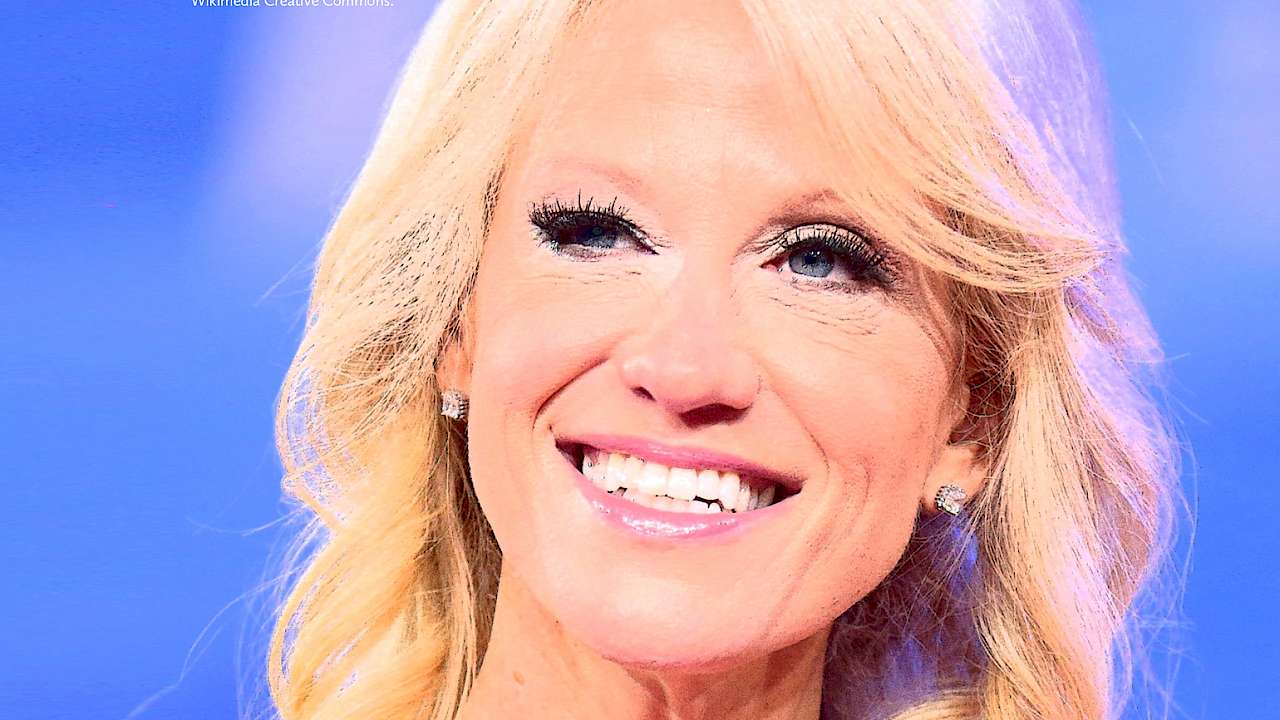

In one survey of 712 American voters, for example, 23% agreed that the ‘Bowling Green Massacre’ – a fictional incident invented by one of President Trump’s key advisers – justified the need for banning immigration from seven predominantly Muslim countries. As this example illustrates, the easy spread of misinformation often means that false beliefs about past events can become widely accepted.

In light of growing concerns about the abundance of misinformation in our physical and online worlds, and about manipulative disinformation campaigns being run by political extremists and powerful foreign governments, misinformation is increasingly viewed as a serious threat to the stability and security of our communities, and of our nations.

As yet, there is no fail-safe inoculation against misinformation, but psychological science is well positioned to play a central role in developing one. Indeed, many hundreds of psychological studies have documented how and when misinformation changes people’s beliefs about past events, and even their memories of those events.

In a typical study, participants view some kind of event – a video, perhaps, or a staged ‘crime’ – and later receive written or verbal misinformation about what happened. After a delay, they are tested on what they remember about the event, and these tests commonly reveal that the misinformation finds its way into people’s honest accounts.

Participants in one recent study, for instance, watched footage from a police officer’s body-worn camera, which depicted the officer striking an unarmed civilian with his baton; participants also read the officer’s report of the incident, which contained many factual errors. When subsequently asked about the incident, participants frequently gave answers that fitted the officer’s account, even though those answers conflicted with the objective facts they had seen in the footage.

Modern misinformation isn’t always verbal, of course. In recent years, doctored photos have become a prevalent medium of political persuasion, and people sometimes mistakenly treat these images as proof of events that never truly occurred. Like verbal misinformation, deceptive photos can influence what people recall about past public events.

In one study, Italian participants who briefly saw a photo of a peace protest in Rome – which was doctored to appear far less than peaceful – recalled the event as having been violent, involving many injuries and even deaths. Misinformation is usually most effective when the source seems highly reliable, and so the potency of images like these may lie in their apparent credibility.

However, one recent series of experiments found that even highly unconvincing doctored photos subtly influenced people’s beliefs about major public events. Mirroring many other studies, this finding suggests that people often change their beliefs about the past not on the basis of reasoned argument, but on a momentary feeling that a suggested event seems familiar.

Illusory familiarity of this kind might arise for any number of reasons, such as when misinformation is easy to imagine or has been repeated several times. One consequence is that even when we initially resist fake news from untrustworthy sources, it may still permeate our memories later, once it feels familiar but we have forgotten where we learned it.

Once one person has accepted verbal or visual misinformation, it can be surprisingly easy for others to come to share the same false beliefs or memories. Numerous studies show, for instance, that when two friends discuss a shared experience about which one of them has been misled, their discussions frequently end with both friends sincerely remembering the misinformation as true.

With these demonstrations in mind, it is clear why distorted accounts of past events can spread so easily within social groups. For this reason, we should be mindful that two witnesses who agree on what they remember are not necessarily twice as compelling as one.

So, what can we do about the misinformation problem? In terms of social influence, perhaps the most intuitive solution would be simply to challenge misinformation; that is, to correct people’s misconceptions with facts. Insofar as this solution is feasible, it might just work.

There is growing evidence that, contrary to several demonstrations of ‘backfire effects’, correcting people’s misconceptions with facts can be rather effective in reversing false beliefs. In research on memories for past events, too, telling people they have been exposed to misinformation has often proven sufficient to reduce, albeit not fully reverse, its influence.

Explicit warnings of this kind may be particularly effective if they are specific about how the misinformation was encountered and why it is incorrect. In short, these findings suggest that actively challenging and publicly correcting misinformation wherever possible is not futile.

But even if it were possible to reach every misinformed person and to show them the facts, there are several reasons why this approach alone will often be insufficient. Not least of these is that memory is partisan: people tend to accept misinformation most readily if it supports their worldview and preferences. When seeing a doctored photo of a fictional event that seemed politically damaging for President George W. Bush, for example, American liberals in one study were more likely than conservatives to mistakenly think they remembered the event. Conversely, it was conservatives who were fooled most easily by a doctored photo that seemed damaging for President Obama.

In a world where trust in institutions and public figures is notoriously fragile, people may be more likely to disbelieve corrective warnings than to abandon worldview-consistent beliefs that even their own memories seem to corroborate.

Perhaps a longer-term kind of memory inoculation, then, is one that would equip people to be more critical, vigilant consumers of information. Indeed, there is an emerging consensus on the importance of improving education in information literacy: training people to discriminate between information from reliable and unreliable sources, and to evaluate suggestions for themselves whilst seeing past their own biases and prejudices.

Beyond these skills, though, an equally important one is ‘source monitoring’: our ability to accurately discriminate what we really saw from what we only heard or thought about.

If we really want to avoid being influenced by misinformation, then we must be both able and willing to actively question the reliability of our own memories and to accept that these, too, might sometimes be fake news.

Read more

- Stephan Lewandowsky, Ullrich K. H. Ecker, Colleen M. Seifert, Norbert Schwarz, and John Cook. 2012. Misinformation and its correction: Continued influence and successful debiasing. Psychological Science in the Public Interest, 13(3): 106-131. Available at: https://goo.gl/48LSKs

- Kristyn A. Jones, William E. Crozier, and Deryn Strange. 2017. Believing is seeing: Biased viewing of body-worn camera footage. Journal of Applied Research in Memory and Cognition, 6: 460-474. Available at: https://goo.gl/cRFMvt

- Robert Nash. 2018. Changing beliefs about past public events with believable and unbelievable doctored photographs. Memory, 26: 439-450. Available at: https://goo.gl/rwAie7

- Fiona Gabbert, Amina Memon, and Kevin Allan. 2003. Memory conformity: Can eyewitnesses influence each other's memories for an event? Applied Cognitive Psychology, 17: 533-543. Available at: https://goo.gl/4aht3Q

- Elizabeth J. Marsh and Brenda W. Yang. 2017. A call to think broadly about information literacy. Journal of Applied Research in Memory and Cognition, 6: 401-404. Available at: https://goo.gl/LUqKie

Copyright Information

As part of CREST’s commitment to open access research, this text is available under a Creative Commons BY-NC-SA 4.0 licence. Please refer to our Copyright page for full details.