Cyber risk

Academic Publications

Quantifying smartphone “use”: Choice of measurement impacts relationships between “usage” and health

Problematic smartphone scales and duration estimates of use dominate research that considers the impact of smartphones on people and society. However, issues with conceptualization and subsequent measurement can obscure genuine associations between technology use and health. Here, we consider whether different ways of measuring “smartphone use,” notably through problematic smartphone use (PSU) scales, subjective estimates, or objective logs, lead to contrasting associations between mental and physical health. Across two samples including iPhone (n = 199) and Android (n = 46) users, we observed that measuring smartphone interactions with PSU scales produced larger associations between mental health when compared with subjective estimates or objective logs. Notably, the size of the relationship was fourfold in Study 1, and almost three times as large in Study 2, when relying on a PSU scale that measured smartphone “addiction” instead of objective use. Further, in regression models, only smartphone “addiction” scores predicted mental health outcomes, whereas objective logs or estimates were not significant predictors. We conclude that addressing people’s appraisals including worries about their technology usage is likely to have greater mental health benefits than reducing their overall smartphone use. Reducing general smartphone use should therefore not be a priority for public health interventions at this time.

(From the journal abstract)

Shaw, H., Ellis, D. A., Geyer, K., Davidson, B. I., Ziegler, F. V., & Smith, A. (2020). Quantifying smartphone “use”: Choice of measurement impacts relationships between “usage” and health. Technology, Mind, and Behavior, 1(2).

Understanding the Psychological Process of Avoidance-Based Self-Regulation on Facebook

In relation to social network sites, prior research has evidenced behaviors (e.g., censoring) enacted by individuals used to avoid projecting an undesired image to their online audiences. However, no work directly examines the psychological process underpinning such behavior. Drawing upon the theory of self-focused attention and related literature, a model is proposed to fill this research gap. Two studies examine the process whereby public self-awareness (stimulated by engaging with Facebook) leads to a self-comparison with audience expectations and, if discrepant, an increase in social anxiety, which results in the intention to perform avoidance-based self-regulation. By finding support for this process, this research contributes an extended understanding of the psychological factors leading to avoidance-based regulation when online selves are subject to surveillance.

(From the journal abstract)

Marder, B., Houghton, D., Joinson, A., Shankar, A., & Bull, E. (2016). Understanding the Psychological Process of Avoidance-Based Self-Regulation on Facebook. Cyberpsychology, Behavior, and Social Networking, 19(5), 321–327.

Measurement practices exacerbate the generalizability crisis: Novel digital measures can help

Psychology’s tendency to focus on confirmatory analyses before ensuring constructs are clearly defined and accurately measured is exacerbating the generalizability crisis. Our growing use of digital behaviors as predictors has revealed the fragility of subjective measures and the latent constructs they scaffold. However, new technologies can provide opportunities to improve conceptualizations, theories, and measurement practices.

(From the journal abstract)

Davidson, B. I., Ellis, D. A., Stachl, C., Taylor, P., & Joinson, A. (2021). Measurement practices exacerbate the generalizability crisis: Novel digital measures can help [Preprint]. PsyArXiv.

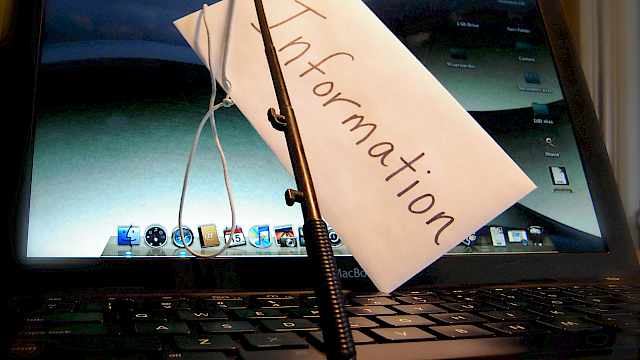

Exploring Susceptibility to Phishing in the Workplace

Phishing emails provide a means to infiltrate the technical systems of organisations by encouraging employees to click on malicious links or attachments. Despite the use of awareness campaigns and phishing simulations, employees remain vulnerable to phishing emails. The present research uses a mixed methods approach to explore employee susceptibility to targeted phishing emails, known as spear phishing. In study one, nine spear phishing simulation emails sent to 62,000 employees over a six-week period were rated according to the presence of authority and urgency influence techniques. Results demonstrated that the presence of authority cues increased the likelihood that a user would click a suspicious link contained in an email. In study two, six focus groups were conducted in a second organisation to explore whether additional factors within the work environment impact employee susceptibility to spear phishing. We discuss these factors in relation to current theoretical approaches and provide implications for user communities.

Highlights

- Susceptibility to phishing emails is explored in an ecologically valid setting.

- Authority and urgency techniques are found to impact employee susceptibility.

- Context-specific factors are also likely to impact employee susceptibility.

- A range of targeted initiatives are required to address susceptibility factors.

(From the journal abstract)

Emma Williams, Joanne Hinds, and Adam N. Joinson. 2018. ‘Exploring Susceptibility to Phishing in the Workplace’. International Journal of Human-Computer Studies, 120 (December): 1–13. https://doi.org/10.1016/j.ijhcs.2018.06.004.

Digital Hoarding Behaviours: Measurement and Evaluation

The social and psychological characteristics of individuals who hoard physical items are quite well understood, however very little is known about the psychological characteristics of those who hoard digital items and the kinds of material they hoard. In this study, we designed a new questionnaire (Digital Behaviours Questionnaire: DBQ) comprising 2 sections: the Digital Hoarding Questionnaire (DHQ) assessing two key components of physical hoarding (accumulation and difficulty discarding); and the second measuring the extent of digital hoarding in the workplace (Digital Behaviours in the Workplace Questionnaire: DBWQ).

In an initial study comprising 424 adults we established the psychometric properties of the questionnaires. In a second study, we presented revised versions of the questionnaires to a new sample of 203 adults, and confirmed their validity and reliability. Both samples revealed that digital hoarding was common (with emails being the most commonly hoarded items) and that hoarding behaviours at work could be predicted by the 10 item DHQ. Digital hoarding was significantly higher in employees who identified as having ‘data protection responsibilities’, suggesting that the problem may be influenced by working practices. In sum, we have validated a new psychometric measure to assess digital hoarding, documented some of its psychological characteristics, and shown that it can predict digital hoarding in the workplace.

(From the journal abstract)

Nick Neave, Pam Briggs, Kerry McKellar, and Elizabeth Sillence. 2019. ‘Digital Hoarding Behaviours: Measurement and Evaluation’. Computers in Human Behavior, 96 (July): 72–77. https://doi.org/10.1016/j.chb.2019.01.037.

Individual Differences in Susceptibility to Online Influence: A Theoretical Review

Scams and other malicious attempts to influence people are continuing to proliferate across the globe, aided by the availability of technology that makes it increasingly easy to create communications that appear to come from legitimate sources. The rise in integrated technologies and the connected nature of social communications means that online scams represent a growing issue across society, with scammers successfully persuading people to click on malicious links, make fraudulent payments, or download malicious attachments.

However, current understanding of what makes people particularly susceptible to scams in online contexts, and therefore how we can effectively reduce potential vulnerabilities, is relatively poor. So why are online scams so effective? And what makes people particularly susceptible to them? This paper presents a theoretical review of literature relating to individual differences and contextual factors that may impact susceptibility to such forms of malicious influence in online contexts.

A holistic approach is then proposed that provides a theoretical foundation for research in this area, focusing on the interaction between the individual, their current context, and the influence message itself, when considering likely response behaviour.

(From the journal abstract)

Williams, Emma J., Amy Beardmore, and Adam N. Joinson. 2017. ‘Individual Differences in Susceptibility to Online Influence: A Theoretical Review’. Computers in Human Behavior 72 (July): 412–21. https://doi.org/10.1016/j.chb.2017.03.002.

Press Accept to Update Now: Individual Differences in Susceptibility to Malevolent Interruptions

Increasingly, connected communication technologies have resulted in people being exposed to fraudulent communications by scammers and hackers attempting to gain access to computer systems for malicious purposes. Common influence techniques, such as mimicking authority figures or instilling a sense of urgency, are used to persuade people to respond to malevolent messages by, for example, accepting urgent updates. An ‘accept’ response to a malevolent influence message can result in severe negative consequences for the user and for others, including the organisations they work for.

This paper undertakes exploratory research to examine individual differences in susceptibility to fraudulent computer messages when they masquerade as interruptions during a demanding memory recall primary task compared to when they are presented in a post-task phase. A mixed-methods approach was adopted to examine when and why people choose to accept or decline three types of interrupting computer update message (genuine, mimicked, and low authority) and the relative impact of such interruptions on performance of a serial recall memory primary task.

Results suggest that fraudulent communications are more likely to be accepted by users when they interrupt a demanding memory-based primary task, that this relationship is impacted by the content of the fraudulent message, and that influence techniques used in fraudulent communications can over-ride authenticity cues when individuals decide to accept an update message. Implications for theories, such as the recently proposed Suspicion, Cognition and Automaticity Model and the Integrated Information Processing Model of Phishing Susceptibility, are discussed.

(From the journal abstract)

Williams, Emma J., Phillip L. Morgan, and Adam N. Joinson. 2017. ‘Press Accept to Update Now: Individual Differences in Susceptibility to Malevolent Interruptions’. Decision Support Systems 96 (April): 119–29. https://doi.org/10.1016/j.dss.2017.02.014.

Employees: The Front Line in Cyber Security

What happens if you lose trust in the systems on which you rely? If the displays and dashboards tell you everything is operating normally but, with your own eyes, you can see that this is not the case? This is what apparently happened with the Stuxnet virus attack on the Iranian nuclear programme in 2010.

Dr Debi Ashenden, CREST lead on protective security and risk assessment, writes that with cyber attacks set to rise, it’s important that we empower employees to defend our front line.

(From the journal abstract)

Ashenden, Debi. 2017. ‘Employees: The Front Line in Cyber Security’. The Chemical Engineer, February 2017, 908 edition. https://crestresearch.ac.uk/comment/employees-front-line-cyber-security/.

Radicalization, the Internet and Cybersecurity: Opportunities and Challenges for HCI

The idea that the internet may enable an individual to become radicalized has been of increasing concern over the last two decades. Indeed, the internet provides individuals with an opportunity to access vast amounts of information and to connect to new people and new groups.

Together, these prospects may create a compelling argument that radicalization via the internet is plausible. So, is this really the case? Can viewing ‘radicalizing’ material and interacting with others online actually cause someone to subsequently commit violent and/or extremist acts? In this article, we discuss the potential role of the internet in radicalization and relate to how cybersecurity and certain HCI ‘affordances’ may support it.

We focus on how the design of systems provides opportunities for extremist messages to spread and gain credence, and how an application of HCI and user-centered understanding of online behavior and cybersecurity might be used to counter extremist messages.

By drawing upon existing research that may be used to further understand and address internet radicalization, we discuss some future research directions and associated challenges.

(From the journal abstract)

Hinds, Joanne, and Adam Joinson. 2017. ‘Radicalization, the Internet and Cybersecurity: Opportunities and Challenges for HCI’. In Human Aspects of Information Security, Privacy and Trust, 481–93. Lecture Notes in Computer Science. Springer, Cham. https://researchportal.bath.ac.uk/en/publications/radicalization-the-internet-and-cybersecurity-opportunities-and-c